Imagine you’re standing at a crossroads, trying to decide which path will lead you to success. One route looks promising, but the other seems equally compelling. How do you choose? In the digital marketing world, you don’t have to guess: you can test both paths and let data reveal the winner. This is the fundamental principle behind A/B testing.

Every day, businesses lose thousands of potential customers because of small decisions: the color of a button, the wording of a headline, or the placement of a call-to-action. These seemingly minor details can make the difference between a visitor clicking “Buy Now” or abandoning your site forever. The good news? You don’t need to rely on intuition or guesswork anymore.

A/B testing, also known as split testing, is a scientific approach that helps marketers, business owners, and digital marketing professionals make data-driven decisions. Whether you’re optimizing landing pages, email campaigns, or website elements, understanding variant testing is crucial for maximizing your conversion rate optimization efforts.

In this comprehensive guide, we’ll break down everything you need to know about A/B testing in simple, actionable terms. By the end, you’ll understand not just what it is, but how to implement it effectively to grow your business.

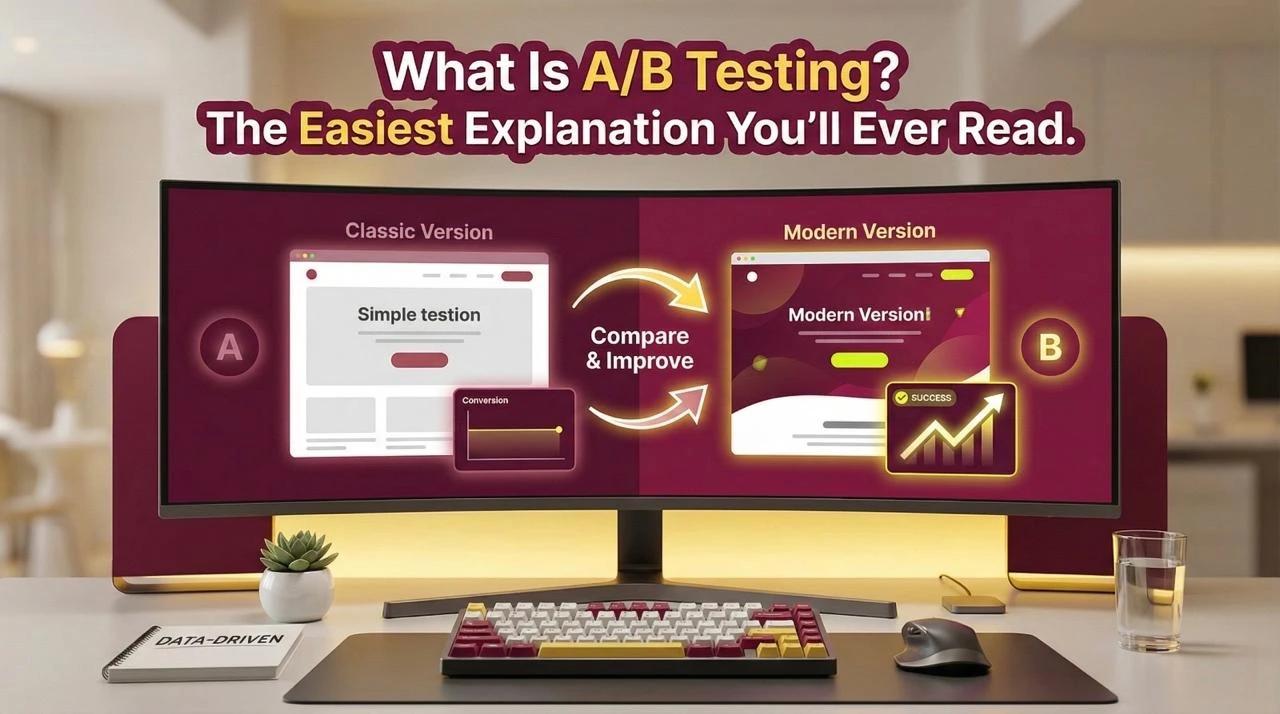

A/B testing is a controlled experiment where you compare two versions of a webpage, email, or marketing asset to determine which one performs better.

Version A (the control) competes against Version B (the variation) to see which drives more conversions, clicks, or engagement.

Here’s how the testing methodology works in practice:

The beauty of split testing lies in its simplicity. You’re not making sweeping changes to your entire website. Instead, you’re testing one variable at a time, which allows you to pinpoint exactly what works and what doesn’t.

For businesses focused on conversion rate optimization, A/B testing eliminates the guesswork. Rather than redesigning your entire website based on assumptions, you make incremental improvements backed by real user data. This methodical approach ensures that every change you implement moves the needle.

In today’s competitive digital landscape, minor improvements can translate into massive revenue gains. Consider this: if your website converts at 2% and you raise that to 3% through split testing, you’ve increased conversions by 50%, and revenue by roughly the same amount if traffic and average order value hold steady, all without spending an extra dollar on advertising.
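The arithmetic behind that 2% to 3% example can be sketched in a few lines of Python. The visitor count and average order value below are made-up numbers for illustration only, and the function names are hypothetical.

```python
def relative_uplift(baseline_rate: float, new_rate: float) -> float:
    """Relative change in conversion rate; 0.5 means a 50% improvement."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (new_rate - baseline_rate) / baseline_rate


def expected_revenue(visitors: int, conversion_rate: float, avg_order_value: float) -> float:
    """Revenue under the simplifying assumption that every order is worth the same."""
    return visitors * conversion_rate * avg_order_value


# Illustrative assumptions: same traffic and order value before and after the test.
visitors = 10_000
avg_order_value = 50.0

before = expected_revenue(visitors, 0.02, avg_order_value)
after = expected_revenue(visitors, 0.03, avg_order_value)

print(f"Conversion uplift: {relative_uplift(0.02, 0.03):.0%}")
print(f"Revenue before: {before:.2f}, after: {after:.2f}")
```

Note that the one-percentage-point change is a 50% relative uplift. Reports that mix up absolute and relative changes are a common source of confusion.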

Many businesses operate under dangerous assumptions. They believe they know what their customers want, but the data often tells a different story. A/B testing removes the bias and reveals the truth. Here’s why it matters:

Data-Driven Decision Making: Instead of relying on the highest-paid person’s opinion (the HiPPO effect), you base decisions on actual user behavior. Your control group provides the benchmark, while test variations show you what resonates with your audience.

Cost-Effective Optimization: Acquiring new customers through paid advertising is expensive. Variant testing helps you maximize the value of traffic you already have, effectively lowering your customer acquisition costs.

Risk Mitigation: Rolling out major website changes without testing can be disastrous. Split testing allows you to validate ideas before implementing them site-wide, protecting your existing conversion rates.

Continuous Improvement: Conversion rate optimization is not a one-time project; it’s an ongoing process. Regular A/B testing creates a culture of continuous improvement where small wins compound over time.

According to recent industry research from VWO, companies that consistently run split testing experiments see significant conversion improvements compared to those that don’t test at all. This isn’t magic. It’s the power of testing methodology applied systematically.

The applications of A/B testing extend far beyond website tweaks. Marketers use variant testing for:

Companies like Amazon, Netflix, and Google run thousands of split testing experiments simultaneously, constantly refining their user experience based on data.

Not all A/B testing experiments are created equal. To get meaningful results, you need to understand the fundamental components that make testing methodology effective.

This is where many beginners stumble. Statistical significance tells you that your test results are unlikely to be due to random chance. Imagine flipping a coin twice: if it lands on heads both times, you wouldn’t conclude the coin is biased. You need more data.

The same principle applies to split testing. If Version B gets 5 more conversions than Version A, is that meaningful or just luck? Statistical significance tells you the answer. Most experts recommend reaching at least 95% confidence before declaring a winner.
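To make the "5 more conversions" question concrete, here is a minimal sketch of one common way to check significance: a two-sided, two-proportion z-test using only the Python standard library. The visitor and conversion counts are invented for illustration, and testing tools may use different statistical methods than this one.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test: no variation in the data")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: B has 5 more conversions than A on 1,000 visitors each.
_, p_small = two_proportion_z_test(20, 1_000, 25, 1_000)
# Same conversion rates, but ten times the traffic.
_, p_large = two_proportion_z_test(200, 10_000, 250, 10_000)

for label, p in (("1,000 visitors per variant", p_small),
                 ("10,000 visitors per variant", p_large)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.3f} ({verdict} at 95% confidence)")
```

The same 2.0% versus 2.5% difference fails the 95% threshold with the smaller sample and clears it with the larger one, which is why sample size matters so much.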

Sample size matters enormously here. Testing with 50 visitors per variant won’t give you reliable insights. You typically need hundreds or thousands of visitors in your control group and test group to reach statistical validity.

The most common mistake in A/B testing is trying to test too many things at once. When you change multiple variables simultaneously, you can’t determine which change caused the improvement. This is why variant testing should be focused and methodical.

Start with elements that have the biggest potential impact:

High-Impact Areas:

Medium-Impact Areas:

Low-Impact Areas:

Focus your initial split testing efforts on high-impact areas. Once you’ve optimized the major elements, you can move to more granular tests.

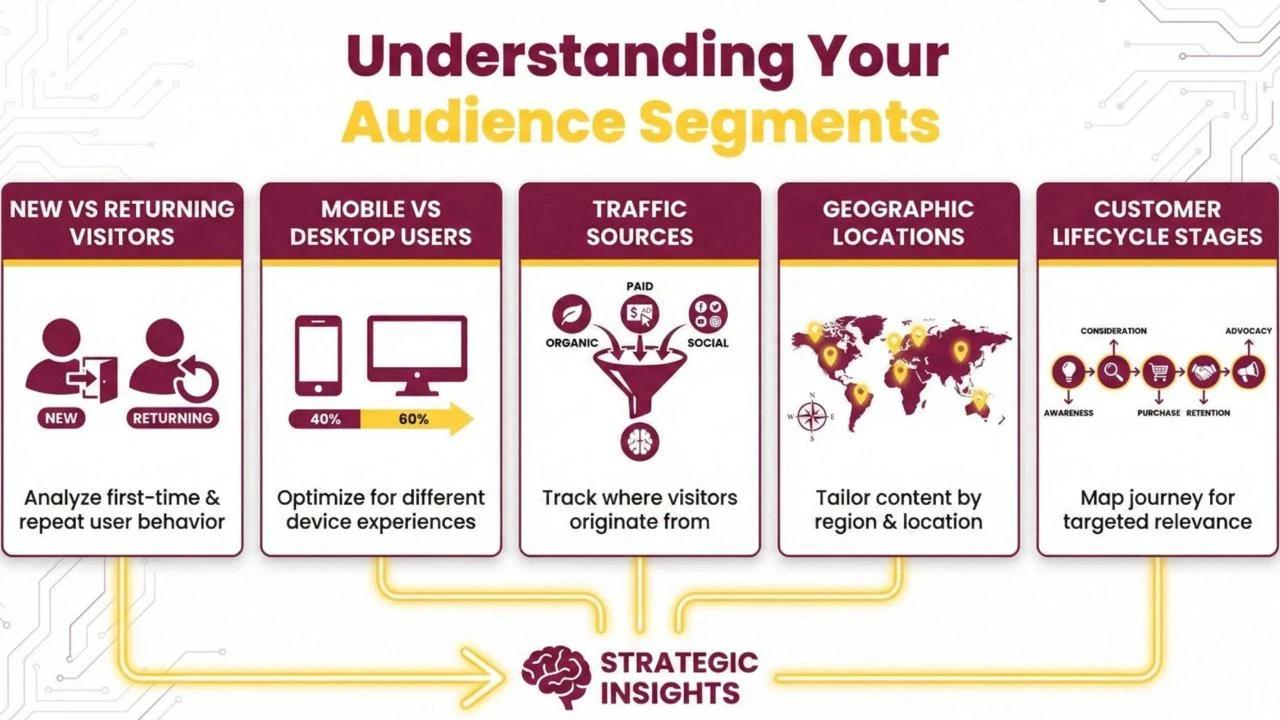

Not all visitors are the same. A change that improves conversions for new visitors might decrease conversions for returning customers. Advanced conversion rate optimization strategies involve segmenting your audience and running targeted variant testing experiments for different groups:

This segmented approach to A/B testing provides deeper insights and prevents you from making changes that help one group while hurting another.
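A small sketch of what a segmented readout might look like. The counts are made up to show the effect described above: Version B wins overall but loses among returning customers. The function names and data layout are assumptions, not the output format of any particular tool.

```python
from collections import defaultdict

# Hypothetical results: (segment, variant, visitors, conversions).
results = [
    ("new", "A", 4000, 80),
    ("new", "B", 4000, 120),
    ("returning", "A", 1000, 80),
    ("returning", "B", 1000, 60),
]


def rates_by_segment(rows):
    """Conversion rate for each variant within each segment."""
    rates = defaultdict(dict)
    for segment, variant, visitors, conversions in rows:
        rates[segment][variant] = conversions / visitors
    return {segment: dict(by_variant) for segment, by_variant in rates.items()}


def overall_rates(rows):
    """Conversion rate for each variant across all segments combined."""
    totals = defaultdict(lambda: [0, 0])  # variant -> [visitors, conversions]
    for _, variant, visitors, conversions in rows:
        totals[variant][0] += visitors
        totals[variant][1] += conversions
    return {variant: conv / vis for variant, (vis, conv) in totals.items()}


print("Overall:", overall_rates(results))
for segment, by_variant in rates_by_segment(results).items():
    print(f"{segment}:", by_variant)
```

Looking only at the overall numbers here would hide the drop among returning customers.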

Ready to start your first split testing experiment? Follow this proven framework that combines testing methodology with practical execution.

Every A/B testing experiment needs a clear, measurable goal. Vague objectives like “improve the website” won’t cut it. Instead, define specific metrics:

Your goal determines how you measure success and when to conclude the test. For conversion rate optimization, focus on outcomes that directly impact revenue.

A hypothesis is your educated guess about what will improve performance. Good hypotheses follow this format:

Changing [variable] from [current state] to [new state] will [increase/decrease] [metric] because [reasoning].

For example: Changing the call-to-action button color from green to orange will increase click-through rates by 10% because orange creates more visual contrast against our blue background.
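If you keep your hypotheses in a spreadsheet or script, the template above maps naturally onto a small record type. This is only a sketch: the `Hypothesis` class and its field names are hypothetical, chosen to mirror the bracketed slots in the format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One test hypothesis, mirroring the template's bracketed slots."""
    variable: str
    current_state: str
    new_state: str
    direction: str  # "increase" or "decrease"
    metric: str
    reasoning: str

    def __post_init__(self):
        if self.direction not in ("increase", "decrease"):
            raise ValueError("direction must be 'increase' or 'decrease'")

    def statement(self) -> str:
        """Render the hypothesis as a single sentence."""
        return (
            f"Changing {self.variable} from {self.current_state} to {self.new_state} "
            f"will {self.direction} {self.metric} because {self.reasoning}."
        )


cta_test = Hypothesis(
    variable="the call-to-action button color",
    current_state="green",
    new_state="orange",
    direction="increase",
    metric="click-through rates by 10%",
    reasoning="orange creates more visual contrast against our blue background",
)
print(cta_test.statement())
```

Forcing every idea through the same fields makes it obvious when a proposed test is missing a metric or a reason.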

This approach to variant testing ensures you’re learning from every experiment, not just running random tests.

Now build your test variations. Remember the golden rule of split testing – change only one variable at a time. If you change both the headline and the button color, you won’t know which change drove the results.

Your control group sees the original version (Version A). Your test group sees the new version (Version B). Make sure both versions are live simultaneously, because running them one after the other introduces timing variables that can skew results.
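Testing tools handle the traffic split for you, but it helps to see one way it can work. The sketch below assigns each visitor to a variant by hashing a visitor ID, so the same person always sees the same version while both versions run at the same time. The `assign_variant` function, the experiment name, and the ID format are all assumptions for illustration, not how any specific platform does it.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a visitor to 'A' (control) or 'B' (variation)."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


# Hypothetical visitor IDs; in practice these might come from a cookie.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "cta-color-test")] += 1

print(counts)
print(assign_variant("visitor-42", "cta-color-test"))
```

Including the experiment name in the hash means a visitor's group in one test does not determine their group in the next.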

Use an online A/B testing calculator to determine how many visitors you need for statistically valid results. Factors that influence sample size include:

Don’t stop your testing methodology prematurely. Many businesses declare a winner after a few days, only to see the results reverse when more data comes in. Patience is crucial for valid split testing.
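For a rough idea of what a sample size calculator does under the hood, here is a minimal sketch using the standard formula for comparing two proportions. The inputs (baseline rate, relative lift you want to detect, 5% significance level, 80% power) are common defaults assumed here for illustration. Online calculators may use different formulas and give somewhat different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float,
                            relative_lift: float,
                            alpha: float = 0.05,
                            power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.80
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Detecting a lift from 2% to 3% versus a lift from 2% to 2.2%.
print(sample_size_per_variant(0.02, 0.50))
print(sample_size_per_variant(0.02, 0.10))
```

The smaller the improvement you want to detect, the more visitors you need, which is one reason tests on subtle changes take so long to conclude.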

Launch your experiment and let it run until you reach statistical significance. During this time:

Most variant testing tools will automatically split traffic and track conversions. Popular platforms include Optimizely, VWO, and Convert. Google Optimize, long a popular free option, was discontinued by Google in September 2023.

Once you’ve reached statistical significance, analyze your data carefully. Look beyond just the primary metrics. Sometimes a change that improves conversions might decrease average order value or customer quality.

Consider these questions:

If you have a clear winner, implement it site-wide. But don’t stop there. Successful conversion rate optimization involves continuous A/B testing, and each experiment should inform your next hypothesis.

Document every test, even failures. Some of the most valuable insights come from understanding what didn’t work and why.

Even experienced marketers make errors that invalidate their split testing results. Avoid these pitfalls to ensure your variant testing provides accurate insights.

This is the most frequent mistake. When you change five elements simultaneously, you have no idea which change caused the improvement. Stick to single-variable tests, especially when starting out with testing methodology.

Declaring a winner after 24 hours or 50 conversions is tempting, especially when results look promising. But premature conclusions lead to false positives. Always wait for statistical significance before making decisions about your A/B testing experiments.

Did you start a major advertising campaign during your test? Was there a holiday or seasonal event? External factors can dramatically impact conversion rate optimization results. Try to run split testing experiments during “normal” periods, or at least account for external variables in your analysis.

Running one A/B testing experiment every six months won’t transform your business. Companies that see the biggest impact from variant testing run multiple simultaneous tests and maintain a consistent testing calendar.

Don’t waste time testing button shapes when your headline or value proposition needs work. Focus your testing methodology on high-impact elements before optimizing minor details.

Once you’ve mastered basic split testing, these advanced techniques can take your conversion rate optimization to the next level.

While A/B testing compares two versions, multivariate testing examines multiple variables simultaneously. It’s more complex but can reveal interactions between elements. For example, you might discover that a red button works best with one headline, while a blue button performs better with another.

However, multivariate testing requires significantly more traffic. Each combination needs sufficient data to reach statistical significance, making this variant testing approach suitable only for high-traffic websites.
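A quick sketch shows why the traffic requirement grows so fast. It lists every combination in a full multivariate test and multiplies by a per-combination sample size. The element names, variants, and the figure of 4,000 visitors per combination are illustrative assumptions.

```python
from itertools import product


def variant_combinations(elements: dict) -> list:
    """Every combination of variants, one dict per combination."""
    names = list(elements)
    return [
        dict(zip(names, combo))
        for combo in product(*(elements[name] for name in names))
    ]


def total_visitors_needed(elements: dict, visitors_per_combination: int) -> int:
    """Traffic needed if each combination must reach the same sample size."""
    return len(variant_combinations(elements)) * visitors_per_combination


# Hypothetical test: two button colors, two headlines, three hero images.
elements = {
    "button_color": ["red", "blue"],
    "headline": ["Headline 1", "Headline 2"],
    "hero_image": ["photo", "illustration", "video"],
}

combos = variant_combinations(elements)
print(len(combos), "combinations, for example:", combos[0])
print("Visitors needed:", total_visitors_needed(elements, 4_000))
```

A simple A/B test with the same per-variant sample size would need only two groups, compared with twelve here.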

Rather than running all variations simultaneously, sequential testing methodology involves testing one change, implementing the winner, then testing another change. This approach is slower but works well for low-traffic sites that can’t split their audience into multiple segments.

Advanced conversion rate optimization platforms allow you to test personalized experiences. Instead of showing everyone the same variants, you can serve different versions based on user attributes: location, device, referral source, or past behavior.

This sophisticated approach to A/B testing requires robust analytics infrastructure but can deliver substantial improvements for businesses with diverse customer bases.

Choosing the right platform for your split testing experiments depends on your budget, technical capabilities, and testing frequency.

Google Optimize (Free, discontinued): Google shut down Optimize on September 30, 2023. It integrated with Google Analytics and supported basic variant testing, which made it a common starting point for beginners. Teams that relied on it now need one of the alternatives below.

Optimizely (Premium): Enterprise-level platform offering advanced features including multivariate tests, personalization, and detailed analytics. Best for companies running extensive conversion rate optimization programs. Explore Optimizely’s A/B testing capabilities.

VWO (Visual Website Optimizer): User-friendly interface with visual editors that don’t require coding. Good middle-ground option with strong testing methodology features.

Convert (Mid-tier): Focuses on privacy-compliant testing, important for businesses concerned about data regulations while conducting A/B testing.

Adobe Target (Enterprise): Part of Adobe Experience Cloud, offering sophisticated personalization and variant testing for large organizations.

Your A/B testing platform should track:

Comprehensive tracking ensures you understand not just if something worked, but why it worked, informing future split testing efforts.

Understanding how other companies apply testing methodology can inspire your own conversion rate optimization strategy.

An online retailer tested checkout page variations, simplifying from a four-step to a one-page checkout. The result? A 21% increase in completed purchases. This single A/B testing experiment generated millions in additional annual revenue.

A software company tested their pricing page, replacing a feature comparison table with a simple three-tier pricing structure. Conversions increased 41% because the simplified presentation reduced decision paralysis—a key insight from their variant testing program.

A news website tested headline formulas using split testing. They discovered that questions performed 28% better than declarative statements for their audience. This insight transformed their entire content strategy, proving that systematic A/B testing delivers compounding benefits.

For conversion rate optimization to become a competitive advantage, it must be embedded in your organizational culture. Here’s how to build a testing-first mindset.

Executives need to understand that split testing isn’t about finding quick wins; it’s about building a data-driven decision-making framework. Share case studies and potential ROI to gain support for sustained variant testing investment.

Don’t test randomly. Develop a prioritized backlog of A/B testing hypotheses based on:

This organized approach to testing methodology ensures you focus on experiments that matter.

Maintain a central repository of all split testing experiments: hypotheses, methodologies, results, and learnings. This institutional knowledge prevents repeated mistakes and helps team members learn from past A/B testing efforts.

Not every variant testing experiment will succeed, and that’s perfectly fine. Failed tests provide valuable insights. Create a culture where teams feel safe proposing bold hypotheses without fear of failure.

As technology evolves, so does the sophistication of conversion rate optimization and variant testing approaches.

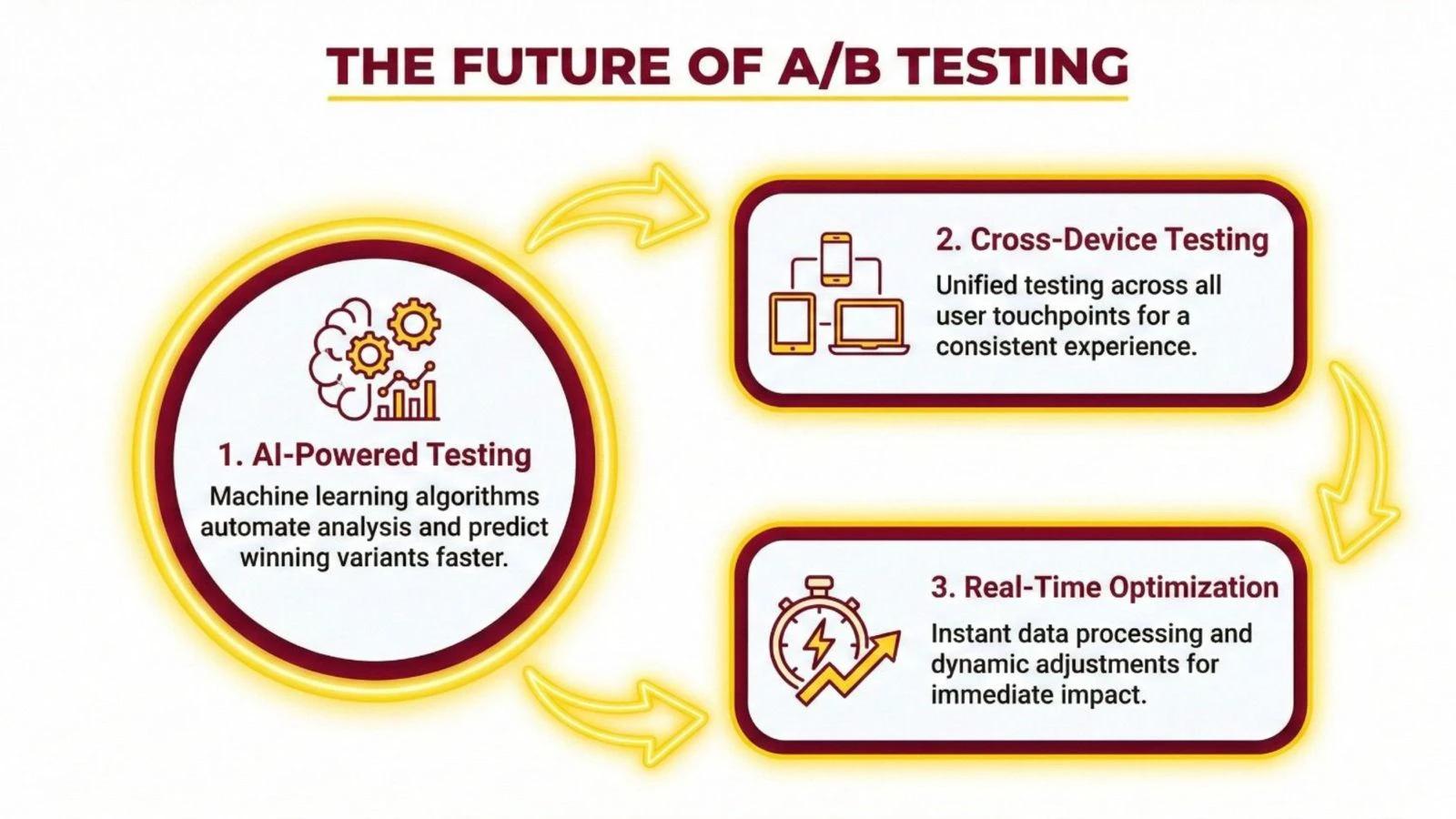

Machine learning algorithms can now suggest split testing hypotheses, predict winning variations, and automatically allocate traffic to better-performing options. These AI-driven A/B testing tools accelerate the optimization process dramatically.
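The article does not name a specific algorithm, but one widely used technique for automatically shifting traffic toward better performers is a multi-armed bandit with Thompson sampling. The simulation below is a minimal sketch of that idea. The "true" conversion rates are invented, and a real tool would observe live conversions instead of simulating them.

```python
import random


def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate traffic allocation; returns how many visitors each variant received."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    successes = [0] * n_arms
    failures = [0] * n_arms
    pulls = [0] * n_arms
    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its Beta posterior.
        samples = [
            rng.betavariate(successes[i] + 1, failures[i] + 1)
            for i in range(n_arms)
        ]
        arm = samples.index(max(samples))  # show the variant that looks best right now
        pulls[arm] += 1
        # Simulated visitor: converts with the variant's (hidden) true rate.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


# Hypothetical true conversion rates for Version A and Version B.
allocation = thompson_sampling([0.05, 0.20], rounds=5_000)
print("Visitors sent to A and B:", allocation)
```

Unlike a fixed 50/50 split, this approach sends fewer visitors to the weaker version as evidence accumulates. The trade-off is that the results are harder to analyze with a standard significance test.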

Users increasingly interact with brands across multiple devices. Future testing methodology will need to account for these cross-device journeys, understanding how desktop tests impact mobile conversions and vice versa.

Rather than running week-long tests, emerging platforms enable real-time variant testing that instantly adjusts based on performance. This dynamic approach to conversion rate optimization delivers faster results.

A/B testing isn’t complicated. It’s simply the scientific method applied to digital marketing. By comparing different versions and measuring results, you replace assumptions with evidence. Every test teaches you something valuable about your audience, even when the results surprise you.

The businesses that dominate their markets aren’t necessarily the ones with the biggest budgets. They’re the ones that systematically test, learn, and improve. Through consistent split testing and a commitment to conversion rate optimization, you can make incremental gains that compound into significant competitive advantages.

Don’t wait for the perfect hypothesis or the ideal testing scenario. Start small, test one element, gather data, and iterate. Your first variant testing experiment might not revolutionize your business, but it will start a journey toward data-driven decision making that transforms how you operate.

The question isn’t whether you can afford to invest in A/B testing; it’s whether you can afford not to. Your competitors are already testing. Your customers are already telling you what works through their behavior. The only question is: are you listening?

Understanding A/B testing is just one piece of the digital marketing puzzle. If you’re serious about building a career in this dynamic field, you need comprehensive training that goes beyond theory.

At WHY TAP, India’s first AI-powered IT training institute, we offer hands-on digital marketing courses that teach you not just A/B testing, but the complete conversion rate optimization framework used by leading companies. Our programs include:

Whether you’re a student, job seeker, or professional looking to upskill, our Digital Marketing course provides the practical skills employers demand. You’ll learn to design testing methodologies, analyze data, and make decisions that drive real business results.

Don’t just learn about A/B testing; master it. Join thousands of successful graduates who transformed their careers through WHY TAP’s comprehensive programs.

Explore our Digital Marketing courses now and get started with hands-on training, live projects, and guaranteed placement support.